- Executive Summary

- Recommendation as Infrastructure

- The Rise of Deep Performance Recommenders

- Criteo’s Track Record of Innovation

- The Emergence of Recommendation Agents

- Shared DNA: LLMs and Recommendation Systems

- Why Alignment Is Harder, and More Valuable in Commerce

- Why LLMs Can’t Replace Recommendation Systems (Yet)

- The Hybrid Path: Performance-Tuned Recommendation Agents

- Closing the Loop: The Three-Stage Agent

- What This Means for the Market

By Flavian Vasile, Chief AI Architect, Criteo

Executive Summary

Recommendation systems have become the invisible engine of digital commerce, influencing what people see, buy, and ultimately, where money flows. What began as a feature is now core infrastructure that drives engagement, conversion, and growth.

The next evolution won’t come from better recommenders alone, but from hybrid systems that combine the precision of deep learning recommendation, fed by behavioral and transaction data, with the conversational intelligence of Large Language Models (LLMs). These systems will do more than predict; they’ll understand, reason, and explain, creating intelligent agents that guide consumers with both accuracy and trust.

No company is better positioned to lead this transformation than Criteo. With visibility into 720 million daily shoppers, $1 trillion in annual transactions, and a global network of 17,000 advertisers, Criteo has spent two decades perfecting large-scale performance recommendation. From breakthroughs like DeepKNN, MetaProd2Vec, and Causal Embeddings to cutting-edge work in counterfactual learning and causal modeling, we’ve helped establish the performance foundation the industry runs on.

Now, we’re laying the groundwork to bring performance and dialogue together in a single hybrid framework. These performance-tuned recommendation agents will interpret intent, retrieve optimized results, and explain their reasoning in natural language, closing the loop between relevance, results, and trust.

This is more than a technical shift. It’s a new foundation for commerce—one where AI drives discovery, trust fuels engagement, and hybrid systems define the winners.

Recommendation as Infrastructure

Recommendation systems (the AI engines and algorithms that suggest products on retail sites, titles on Netflix, or playlists on Spotify) have quietly become the backbone of digital commerce. Most people don’t know the term, but they rely on these systems every day.

Our point of view is simple: recommendation is no longer a feature, it’s infrastructure. It shapes consumer choice, drives discovery, and guides consumer spending. For businesses, this means recommendation systems are not optional add-ons. They are core drivers of measurable outcomes: higher engagement, stronger conversion rates, greater loyalty, and more efficient marketing spend. In today’s economy, scalable performance in recommendation is not just helpful, it’s a competitive edge.

But the next chapter in recommendation will not be written by recommendation systems alone. It will be defined by hybrid systems that combine the measurable performance of recommendation systems with the conversational intelligence of LLMs, a technology that is quickly reshaping how consumers discover information and make decisions.

Consider OpenAI’s recent partnerships with Shopify and Etsy, which highlight how conversational AI is beginning to enter commerce. Their approach relies on structured product feeds, and even within that specification, merchants are asked to provide performance signals such as popularity scores and return rates to improve ranking. This is telling: even in conversational commerce, content alone is not enough. The system still needs outcome-based signals to decide which products to highlight.

These partnerships point in the right direction, but they stop short of scaling across the broader commerce ecosystem. In this piece, we’ll explain why hybrid systems are the future, and why Criteo’s expertise and scale uniquely position us to build them.

The Rise of Deep Performance Recommenders

Not all recommendation systems are created equal. The first recommenders focused on simple patterns, such as “people who bought this also bought that.” They relied on rules or shallow models but struggled with complex consumer journeys and massive product catalogs.
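To make the “people who bought this also bought that” idea concrete, here is a minimal sketch of such a shallow co-occurrence recommender. The basket data, item names and the helper functions `build_co_purchase_counts` and `also_bought` are hypothetical and exist only for illustration. The sketch also shows the limitation described above: it ignores the order of events, the shopper’s context and any business outcome, and simply counts co-purchases.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_co_purchase_counts(baskets):
    """Count how often each ordered pair of distinct items shares a basket."""
    counts = defaultdict(Counter)
    for basket in baskets:
        # set() ignores repeat purchases of the same item within one basket
        for a, b in permutations(set(basket), 2):
            counts[a][b] += 1
    return counts


def also_bought(counts, item, k=3):
    """Up to k items most often bought with `item`; ties broken alphabetically."""
    ranked = sorted(counts.get(item, Counter()).items(), key=lambda kv: (-kv[1], kv[0]))
    return [other for other, _ in ranked[:k]]


# Hypothetical order history, for illustration only
baskets = [
    ["tent", "sleeping_bag", "lantern"],
    ["tent", "sleeping_bag"],
    ["tent", "stove"],
    ["lantern", "batteries"],
]
counts = build_co_purchase_counts(baskets)
print(also_bought(counts, "tent"))  # ['sleeping_bag', 'lantern', 'stove']
```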

Over the past decade, a new class of systems has emerged: Deep Performance Recommenders. These models use deep learning to capture far richer patterns in consumer behavior. Instead of just co-purchase signals, they learn from vast sequences of interactions (clicks, searches, purchases, etc.) across billions of events. They optimize directly for advanced business objectives like sales ranking accuracy, ROI, and customer lifetime value, not just for relevance. At scale, they offer something rare: predictable performance. Given enough data, they improve outcomes reliably and measurably, making them a foundation businesses can trust.

Criteo has been a pioneer here, releasing landmark datasets, advancing embedding-based models, and winning recognition for production systems like DeepKNN, our proprietary deep learning recommendation system.

Criteo’s Track Record of Innovation

Over the last two decades, Criteo has built performance recommendation at scale. We see 720 million daily shoppers and $1 trillion in annual commerce transactions. Our integrations span 17,000 advertiser clients globally, including 230 retailers and 4,000 brands. This breadth means our recommendation systems are trained and deployed across the entire commerce ecosystem, connecting retailers, brands, and platforms in a true many-to-many architecture.

Beyond just scale, we became early adopters of product embeddings with proposals such as MetaProd2Vec, released the landmark 1TB Click Logs Dataset, and built award-winning production systems such as DeepKNN (SBR Technology Excellence Award, 2024). Our applied breakthroughs are continually reinforced by theoretical contributions at top venues, including NeurIPS, ICML, ICLR and RecSys (see Criteo AI Lab publications).

A key differentiator has been our leadership in performance-optimized recommendation. By learning directly from user behavior in live environments, our systems continuously adapt and improve, delivering product recommendations that maximize both immediate engagement and long-term value. Our projects range from early advances like Causal Embeddings (2018) and Distributionally Robust Counterfactual Learning (2020) to today’s state-of-the-art off-policy evaluation and learning methods (2024). These are just a few examples, and together they show how training recommendation systems directly on commerce signals can turn logged interactions into smarter, more adaptive, and deeply personalized outcomes.

We’ve also shaped the global research agenda through long-running workshops like REVEAL (2018-2022) and CONSEQUENCES (2022-present), advancing methods in causality, counterfactual evaluation, sequential decision-making, and long-term value modeling in recommender systems.

The Emergence of Recommendation Agents

Meanwhile, LLMs have opened a new frontier. Their strength lies in conversation: they can interpret complex requests, ask clarifying questions, and explain their choices in natural language. This makes them engaging and transparent, qualities that today’s recommendation systems often lack.

However, LLMs on their own aren’t built for commerce. They don’t connect to shopping data, and they don’t optimize for business outcomes. Their strength is in conversation and explanation, which makes them the perfect complement to recommendation systems. That’s why we believe the future of commerce recommendation is hybrid.

Recommendation systems deliver performance and scale. LLMs bring dialogue and trust. Together they create systems that are both effective and explainable. And this is exactly where Criteo is focused: building performance-tuned recommendation agents that unite recommendation systems and LLMs into a new foundation for commerce.

Shared DNA: LLMs and Recommendation Systems

At first sight, recommendation systems and LLMs may look like two very different technologies. Yet at their core, both are sequence models trained on massive logs of human behavior.

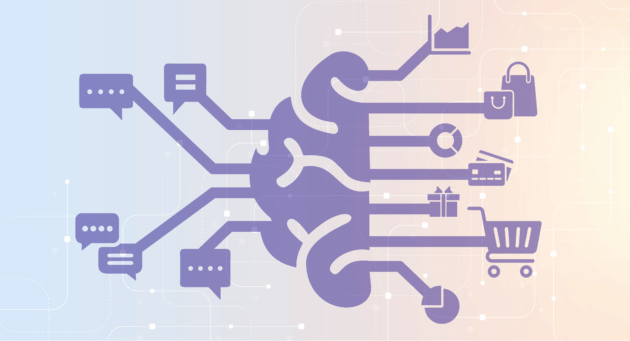

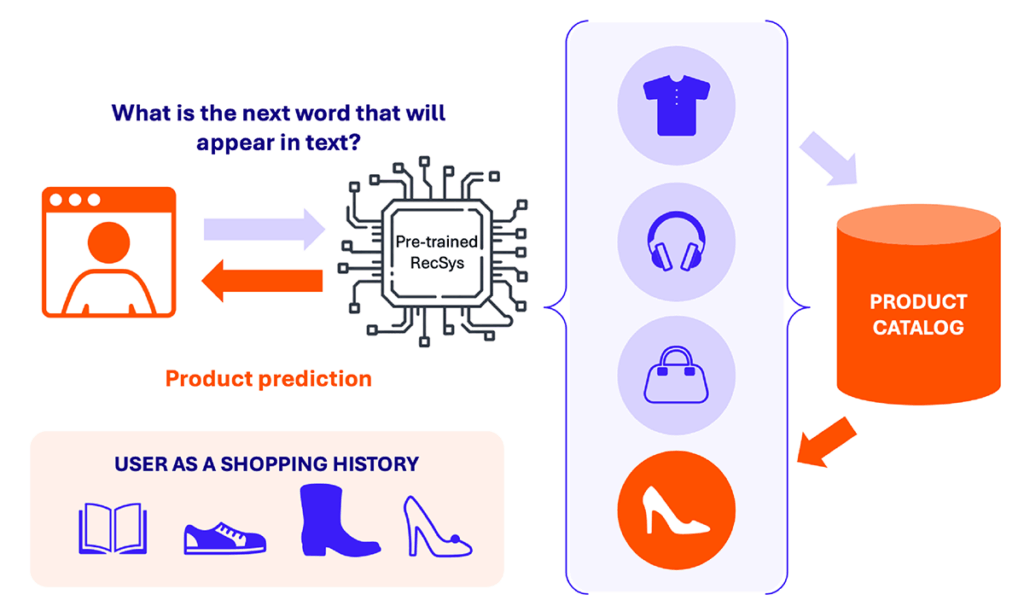

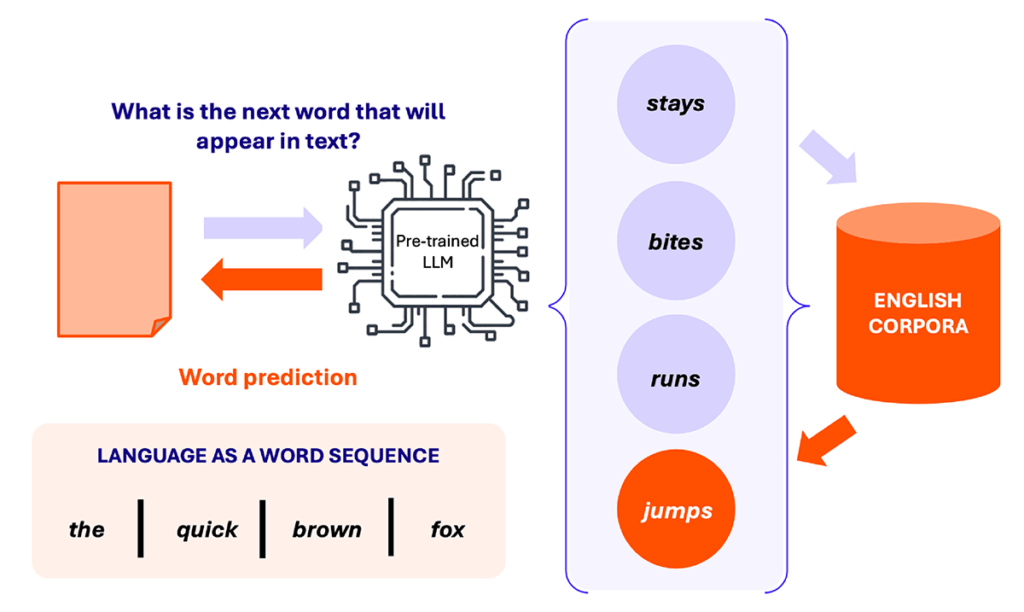

Figures 1 and 2 illustrate the conceptual symmetry between recommendation systems and LLMs. Both are sequence models trained on massive logs of human behavior: one predicts the next product, the other the next word.

Figure 1: Recommendation System as a Shopping Sequence Predictor: In a recommendation system, the user’s browsing or shopping history is treated as a sequence of product interactions. A pre-trained recommender model learns to predict the next product the user is most likely to visit or buy, drawing from a vast and ever-changing product catalog. Each click or purchase refines the user’s behavioral “language,” helping the system learn patterns of intent and preference over time.

Figure 2: Large Language Model as a Text Sequence Predictor: In a language model, sentences are treated as sequences of words. A pre-trained LLM predicts the next word given the previous context, using patterns learned from massive text corpora. Just as a recommender predicts the next relevant item, the LLM extends meaning through linguistic continuity, optimizing for coherence and relevance.
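The symmetry in Figures 1 and 2 can be seen in a toy example. The same next-token predictor can be fitted either to shopping sessions (tokens are product IDs) or to sentences (tokens are words). This is a minimal sketch that uses simple bigram counts as a stand-in for the deep sequence models (such as transformers) used in practice. The class name `NextTokenModel` and all of the data are hypothetical.

```python
from collections import Counter, defaultdict


class NextTokenModel:
    """Toy bigram next-token predictor; tokens may be words or product IDs."""

    def __init__(self):
        self.transitions = defaultdict(Counter)

    def fit(self, sequences):
        for seq in sequences:
            for prev, nxt in zip(seq, seq[1:]):
                self.transitions[prev][nxt] += 1
        return self

    def predict_next(self, context):
        """Most frequent successor of the last token (ties: alphabetical), or None."""
        followers = self.transitions.get(context[-1])
        if not followers:
            return None
        return min(followers.items(), key=lambda kv: (-kv[1], kv[0]))[0]


# The same code, trained on two kinds of hypothetical "behavior logs"
shopping = NextTokenModel().fit([
    ["phone", "case", "charger"],
    ["phone", "case", "screen_protector"],
    ["laptop", "mouse"],
])
text = NextTokenModel().fit([
    ["the", "cat", "sat"],
    ["the", "cat", "ran"],
    ["a", "dog", "sat"],
])
print(shopping.predict_next(["laptop", "phone"]))  # case
print(text.predict_next(["the"]))                  # cat
```

Nothing in the model depends on whether the tokens are words or products. The differences appear elsewhere: in vocabulary size, in how quickly the vocabulary churns, and in the feedback used for fine-tuning.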

This similarity is what makes recommendation systems particularly well positioned to understand, adapt, and extend the LLM paradigm. It also explains why combining the two is not just possible, but natural. Both follow the same two phases:

- Pre-training builds a broad “map of the world,” predicting the next word or the next relevant product.

- Fine-tuning adapts that map to specific goals. LLMs use human preference judgments, as shown in OpenAI’s InstructGPT research on aligning language models with reinforcement learning from human feedback (Ouyang et al., 2022), the approach later used for ChatGPT. Recommendation systems use business signals like clicks and conversions, drawing on methods such as Bayesian Personalized Ranking (Rendle et al., 2009).
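The recommender side of fine-tuning can be illustrated with Bayesian Personalized Ranking. BPR learns from triples (u, i, j): user u interacted with item i but not with a sampled item j. It maximizes ln σ(x̂_ui − x̂_uj) minus an L2 penalty. Below is a minimal sketch of one stochastic gradient step for BPR with a matrix-factorization scorer (x̂_ui = p_u · q_i). The function name `bpr_step`, the hyperparameters and the toy interaction are our own illustrative choices, not a production setup.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def bpr_step(P, Q, u, i, j, lr=0.05, reg=0.01):
    """One SGD step of BPR-MF on triple (u, i, j): u prefers positive item i over negative j.

    Ascends ln sigmoid(x_uij) - (reg / 2) * ||params||^2 with x_uij = p_u . (q_i - q_j).
    Returns the BPR loss -ln sigmoid(x_uij) measured before the update.
    """
    p_u, q_i, q_j = P[u].copy(), Q[i].copy(), Q[j].copy()  # use pre-update values
    x_uij = p_u @ (q_i - q_j)
    g = sigmoid(-x_uij)  # d/dx ln sigmoid(x) = sigmoid(-x)
    P[u] += lr * (g * (q_i - q_j) - reg * p_u)
    Q[i] += lr * (g * p_u - reg * q_i)
    Q[j] += lr * (-g * p_u - reg * q_j)
    return float(-np.log(sigmoid(x_uij)))


rng = np.random.default_rng(0)
P = rng.normal(scale=0.1, size=(2, 4))  # 2 users, 4 latent factors
Q = rng.normal(scale=0.1, size=(3, 4))  # 3 items
# Hypothetical implicit feedback: user 0 clicked item 0 but not item 2
losses = [bpr_step(P, Q, u=0, i=0, j=2) for _ in range(500)]
print(f"BPR loss {losses[0]:.3f} -> {losses[-1]:.3f}")
```

The signal here is a pairwise preference inferred from behavior, not a stated judgment. This is the gap the next section explores.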

Because they share this foundation, practitioners can move between the two fields more easily than their surface differences suggest. For companies with deep recommendation system expertise, this is a strategic advantage.

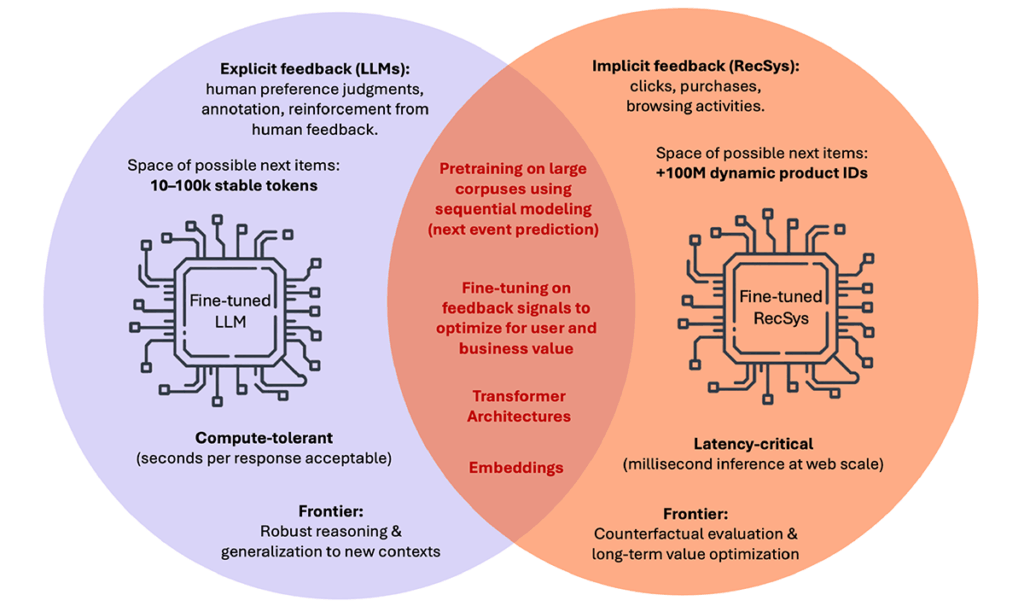

Figure 3: Shared DNA, Different Challenges: LLMs and Recommenders are both sequence models built on embeddings, transformers, and fine-tuning—but diverge in their feedback signals, item spaces, and frontiers of research.

Why Alignment Is Harder, and More Valuable, in Commerce

In both LLMs and recommender systems, fine-tuning or alignment is what makes a model truly useful—it adapts the model’s predictions to reflect what people want.

For LLMs, collecting alignment data is relatively straightforward. They rely on explicit feedback, where humans provide direct judgments about model outputs:

- Preference annotations. Annotators rank completions or rate quality, giving clear supervisory signals.

- Interactive choices. More recently, feedback is collected directly from chat interactions, where users choose one completion over another or provide corrective input.

- Clear intent. Because judgments are stated explicitly, the model knows exactly which response was preferred and can optimize accordingly.

For recommendation systems, collecting alignment data is far more complex. They rely on implicit feedback, where user behavior must be interpreted rather than stated directly, leading to multiple challenges:

- Missing and biased feedback. Outcomes are observed only for the items that are recommended—not for the many alternatives that could have been shown. To evaluate a new policy, systems must answer counterfactual questions such as: “What if I had shown Product B instead of A?”

- Unreliable signals. Even when feedback is observed, its meaning is ambiguous. Most displayed items receive no clicks, but whether that reflects irrelevance, poor timing, or lack of visibility is unclear. And when signals do arrive, they are often delayed. True value may only appear weeks later through purchases, subscriptions, or increased loyalty.

- Catalog size and churn. Feedback is spread thinly across a massive set of items that is continuously changing, as millions of products appear and disappear over time.
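The counterfactual question “What if I had shown Product B instead of A?” is usually answered with importance weighting. Each logged outcome is reweighted by how much more (or less) likely the new policy is to take the logged action than the production policy was. This is a minimal sketch of the standard inverse propensity scoring (IPS) estimator on simulated data. The two-product setup, click rates and function name are hypothetical. The sketch also assumes the production policy’s propensities were logged, which not every system does.

```python
import numpy as np


def ips_estimate(rewards, logging_probs, target_probs):
    """Inverse propensity scoring (IPS) estimate of a new policy's average reward.

    rewards[k]:       observed outcome (e.g. click = 1) for the item actually shown
    logging_probs[k]: probability the production policy gave to that item
    target_probs[k]:  probability the new policy would give to that same item
    """
    rewards = np.asarray(rewards, dtype=float)
    weights = np.asarray(target_probs, dtype=float) / np.asarray(logging_probs, dtype=float)
    return float(np.mean(weights * rewards))


rng = np.random.default_rng(42)
n = 100_000
p_log = {"A": 0.8, "B": 0.2}  # production policy mostly shows A
ctr = {"A": 0.05, "B": 0.10}  # true click rates, unknown to the estimator
p_new = {"A": 0.0, "B": 1.0}  # candidate policy: always show B

shown = rng.choice(["A", "B"], size=n, p=[p_log["A"], p_log["B"]])
clicks = rng.random(n) < np.array([ctr[s] for s in shown])
estimate = ips_estimate(clicks, [p_log[s] for s in shown], [p_new[s] for s in shown])
print(f"IPS estimate for 'always show B': {estimate:.3f} (true value 0.100)")
```

The estimate recovers B’s value from logs where B was shown only 20% of the time. It could not do so if B had never been shown at all, which is why missing feedback is such a central problem.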

These differences (shown in Figure 3) make performance alignment in recommendation systems a fundamentally different problem from LLM alignment. While LLMs benefit from explicit preference data, recommendation systems must wrestle with missing and noisy signals, delayed outcomes, and massive, ever-changing catalogs.

Research on counterfactual learning from implicit feedback (Swaminathan & Joachims, 2015) underscores both the difficulty and the necessity of counterfactual evaluation and optimization for recommendation. Criteo has been tackling these challenges head-on: pioneering off-policy evaluation (Gilotte et al., WSDM 2018), introducing causal embeddings (Bonner & Vasile, RecSys 2018), and advancing counterfactual learning for recommendation (Jeunen et al., KDD 2020).

Building on this foundation, we have continued to push the frontier with contributions such as distributionally robust counterfactual risk minimization (Faury et al., AAAI 2020), fast offline policy optimization for large-scale recommendation (Sakhi et al., AAAI 2022), offline contextual bandits with guarantees (Sakhi et al., ICML 2023; Aouali et al., ICML 2023), and, most recently, pessimistic off-policy methods like logarithmic smoothing (Sakhi et al., NeurIPS 2024). Together, this line of work has positioned Criteo as a leader in robust and theoretically grounded off-policy learning for recommendation, bridging the gap between principled evaluation and real-world scalability.
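Pessimism here means deliberately under-estimating a policy’s value when the evidence is thin. Plain IPS can be thrown off by a few huge importance weights. Logarithmic smoothing damps them by replacing each weighted reward w·r with log(1 + λ·w·r)/λ. Because log(1 + z) ≤ z, the result never exceeds IPS. As λ → 0, it tends back to IPS. The sketch below is based on our reading of the paper, which states the estimator in terms of costs. We write it in reward form and assume rewards in [0, 1] and a fixed λ > 0. Consult the paper for the exact estimator, its guarantees, and how λ is chosen. The function names and data are hypothetical.

```python
import numpy as np


def ips_value(weights, rewards):
    """Plain IPS estimate: mean of importance-weighted rewards."""
    return float(np.mean(np.asarray(weights, float) * np.asarray(rewards, float)))


def log_smoothing_value(weights, rewards, lam):
    """Logarithmically smoothed (pessimistic) value estimate, reward form.

    Each weighted reward w*r becomes log(1 + lam*w*r) / lam. Since log(1 + z) <= z,
    the estimate is never above IPS, and large weights are damped the most.
    Assumes rewards in [0, 1] and lam > 0.
    """
    wr = np.asarray(weights, float) * np.asarray(rewards, float)
    return float(np.mean(np.log1p(lam * wr)) / lam)


# Hypothetical logged data in which one action received a very large importance weight
weights = [0.5, 1.0, 40.0]
rewards = [1.0, 1.0, 1.0]
ips = ips_value(weights, rewards)
ls = log_smoothing_value(weights, rewards, lam=0.1)
print(f"IPS: {ips:.2f}  LS (lambda=0.1): {ls:.2f}")
```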

Why LLMs Can’t Replace Recommendation Systems (Yet)

Despite their promise, today’s LLMs face structural barriers in commerce recommendations:

- Catalog scale. Product catalogs dwarf language vocabularies and change constantly. LLMs need an external retrieval engine that can track churn and surface the right products at scale.

- Data and feedback. Fine-tuning requires live traffic, which is costly and slow. Signals like clicks and purchases are sparse, delayed, and noisy, making iteration cycles long and expensive.

- Nature of fine-tuning. Commerce feedback is mostly implicit and ambiguous. Aligning models requires counterfactual learning and bandit methods—far more complex than the supervised fine-tuning pipelines used for LLMs.

For now, this makes pure LLM recommenders impractical at scale. Their strengths—reasoning and explanation—complement, but don’t replace, the performance and scalability of recommendation systems.

These limitations are consistently reflected in empirical findings across the RecSys literature and in our own work. As detailed in our meta-review “Can LLMs Recommend as well as Modern RecSys?”, current LLMs alone cannot yet deliver performance-optimized recommendations comparable to specialized recommender architectures.

The Hybrid Path: Performance-Tuned Recommendation Agents

The future lies in hybrid systems. A foundational architecture for this approach is Retrieval-Augmented Generation (RAG), introduced by Lewis et al. (2020), which layers a retrieval engine over a generative model to ground outputs in relevant external data.

In the context of commerce recommendation, this architecture can be viewed through an agentic lens: the LLM acts as an intelligent agent that uses the performance-based recommender as a tool. It queries the recommender, retrieves its top candidates, and then reasons, refines, and explains those choices in natural language.

Concretely, the hybrid system operates in two tightly connected layers:

- A recommendation-system backbone that retrieves high-performing candidates optimized for business KPIs.

- An LLM reasoning layer that interprets intent, applies user-specific constraints such as “under $50” or “eco-friendly,” and generates natural-language explanations.
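The division of labor between the two layers can be sketched in a few lines. In this minimal sketch, the recommender backbone is reduced to a sort by a precomputed, hypothetical `predicted_value` score. The LLM reasoning layer is replaced by deterministic constraint filtering and templated explanations; in a real agent, an LLM would interpret the constraints and write the explanations. All names and catalog entries are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Product:
    name: str
    price: float
    eco_friendly: bool
    predicted_value: float  # hypothetical recommender score, e.g. expected conversion value


def backbone_retrieve(catalog: List[Product], k: int) -> List[Product]:
    """Layer 1 (recommender backbone): top-k candidates by predicted business value."""
    return sorted(catalog, key=lambda p: p.predicted_value, reverse=True)[:k]


def reasoning_layer(candidates: List[Product], max_price: Optional[float] = None,
                    eco_only: bool = False) -> List[Tuple[str, str]]:
    """Layer 2 (stand-in for the LLM): apply user constraints, then explain each pick."""
    picks = []
    for p in candidates:
        if max_price is not None and p.price >= max_price:
            continue  # violates "under $X"
        if eco_only and not p.eco_friendly:
            continue  # violates "eco-friendly"
        reasons = [f"strong predicted performance (score {p.predicted_value:.2f})"]
        if max_price is not None:
            reasons.append(f"${p.price:.2f}, under your ${max_price:.0f} budget")
        if eco_only:
            reasons.append("eco-friendly")
        picks.append((p.name, "; ".join(reasons)))
    return picks


catalog = [
    Product("bamboo toothbrush set", 12.0, True, 0.61),
    Product("steel water bottle", 35.0, True, 0.74),
    Product("designer backpack", 180.0, False, 0.90),
    Product("plastic lunch box", 9.0, False, 0.55),
    Product("organic cotton tote", 22.0, True, 0.48),
]
picks = reasoning_layer(backbone_retrieve(catalog, k=4), max_price=50, eco_only=True)
for name, why in picks:
    print(f"{name}: {why}")
```

Note that the cotton tote satisfies both constraints but never reaches the reasoning layer, because it is not in the backbone’s top 4. One possible design choice is to push hard constraints such as price down into retrieval, so that enough eligible candidates survive filtering.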

Together, these layers combine the scalability and performance of recommendation systems with the dialogue and interpretability of LLMs, forming the foundation of next-generation commerce recommendation.

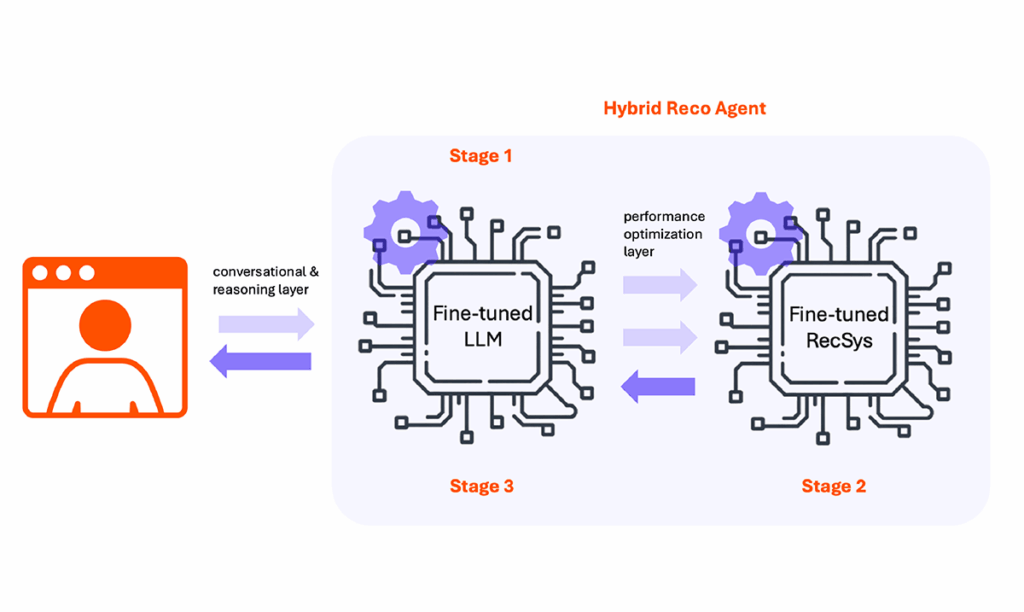

Figure 4: The three-stage hybrid agent: LLMs interpret intent and provide reasoning, while the recommendation system engine ensures performance-optimized retrieval and ranking—combining conversational intelligence with scalable optimization.

Closing the Loop: The Three-Stage Agent

As shown in Figure 4, the hybrid agent operates in three distinct stages:

- Understanding intent. The LLM interprets user needs and converts them into structured product queries.

- Retrieval and ranking. The recommendation system predicts the best-performing product candidates given the product query.

- Reasoning and explanation. The LLM applies filters, balances trade-offs, and explains the choices.
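The first stage, turning free text into a structured product query, is the step least familiar to recommender practitioners. Here is a minimal sketch of its output contract. The `ProductQuery` schema and the vocabularies are hypothetical. A rule-based parser stands in for the LLM, which in practice would be prompted to fill the same fields, typically as JSON that matches a schema. Whatever produces the query, the retrieval stage only needs these structured fields.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ProductQuery:
    """Hypothetical structured query passed from stage 1 to the recommender."""
    category: Optional[str] = None
    max_price: Optional[float] = None
    attributes: List[str] = field(default_factory=list)


# Illustrative vocabularies; a real system would ground these in the catalog taxonomy
KNOWN_CATEGORIES = ("sneakers", "backpack", "water bottle")
KNOWN_ATTRIBUTES = ("eco-friendly", "waterproof", "vegan")


def parse_intent(utterance: str) -> ProductQuery:
    """Rule-based stand-in for stage 1; an LLM would fill the same fields."""
    text = utterance.lower()
    query = ProductQuery()
    query.category = next((c for c in KNOWN_CATEGORIES if c in text), None)
    price = re.search(r"under \$?(\d+(?:\.\d+)?)", text)
    if price:
        query.max_price = float(price.group(1))
    query.attributes = [a for a in KNOWN_ATTRIBUTES if a in text]
    return query


print(parse_intent("I need eco-friendly sneakers under $50 for trail running"))
# ProductQuery(category='sneakers', max_price=50.0, attributes=['eco-friendly'])
```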

Together, these stages form a feedback loop. Each user interaction improves the system, making recommendations more accurate and more trustworthy over time.
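For interactions to improve the system, they must be logged in a form that off-policy methods can reuse. That means recording, alongside each outcome, the probability with which the serving policy chose what it showed. This is a minimal sketch under simple assumptions: it uses epsilon-greedy serving (a common exploration scheme, not necessarily what any production system uses), a hypothetical `LoggedInteraction` record, and simulated clicks. The resulting log has exactly the fields an IPS-style estimator needs to evaluate a new policy offline.

```python
import random
from dataclasses import dataclass


@dataclass
class LoggedInteraction:
    """One served recommendation, with the fields off-policy methods need later."""
    context: str
    shown_item: str
    propensity: float    # probability the serving policy chose shown_item
    reward: float = 0.0  # observed outcome (e.g. 1.0 for a click), filled in later


def serve_and_log(context, scores, log, rng, epsilon=0.1):
    """Epsilon-greedy serving: usually the top-scored item, occasionally a random one."""
    items = sorted(scores)  # fixed order so runs are reproducible
    best = max(items, key=lambda it: scores[it])
    shown = rng.choice(items) if rng.random() < epsilon else best
    # Every item gets epsilon/len(items); the greedy choice also gets 1 - epsilon
    propensity = epsilon / len(items) + (1.0 - epsilon) * (shown == best)
    entry = LoggedInteraction(context, shown, propensity)
    log.append(entry)
    return entry


# Hypothetical recommender scores for one query, and simulated click rates
scores = {"bamboo_brush": 0.2, "steel_bottle": 0.7, "cotton_tote": 0.1}
click_rate = {"bamboo_brush": 0.05, "steel_bottle": 0.12, "cotton_tote": 0.03}
rng = random.Random(0)
log = []
for _ in range(1000):
    entry = serve_and_log("eco-friendly gifts", scores, log, rng)
    entry.reward = 1.0 if rng.random() < click_rate[entry.shown_item] else 0.0
```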

What This Means for the Market

The convergence of recommendation systems and LLMs could mark a pivotal shift in digital commerce. Each technology excels on a different axis: recommendation systems deliver performance, while LLMs offer reasoning. But going forward, neither is sufficient on its own.

The hybrid model unlocks a new category: intelligent agents that are scalable, grounded in real-time data, and capable of transparent decision-making.

For the market, this signals two major shifts:

- Trust becomes a differentiator. As consumers demand transparency, systems that explain their choices will win engagement and regulatory favor.

- AI becomes a growth lever. Hybrid recommendation agents aren’t just infrastructure. They are strategic assets that drive measurable ROI across the funnel.

At Criteo, we are actively building all three stages of this cycle: intent understanding, candidate retrieval, and reasoning with explanations. Each interaction strengthens the loop, making recommendations smarter, more efficient, and more trustworthy over time. By combining performance-grounded retrieval with LLM-based reasoning, Criteo is creating the hybrid architecture that defines the next generation of commerce recommendation. This is not just a technical shift; it’s a new foundation for how businesses grow and how consumers make decisions.

But scale in commerce cannot thrive within silos. Walled gardens and one-to-one partnerships fragment the ecosystem. Criteo takes the opposite approach: a many-to-many architecture that connects retailers, brands, and platforms in a shared network. This marketplace effect compounds over time—every new participant strengthens outcomes for all.

That’s why Criteo is uniquely positioned to define the future of commerce recommendation: hybrid systems that are not only technologically advanced, but ecosystem-wide by design.

Want to know more? This vision is explored in depth in our companion piece, “How RecSys & LLMs Will Converge: Architecture of Hybrid RecoAgents,” which details the technical blueprint behind this next-generation ecosystem.